AI must be kept under control: researchers

The concept of a rogue AI posing a threat to the future of the species is nearly as old as science fiction itself, but two University of Sydney researchers believe we need to start planning now to prevent this from becoming a reality.

In the wake of the defeat of the world champion Go player by Google’s AlphaGo AI, the researchers have warned that AI must be kept under control lest we become defenceless against its capabilities.

Go is believed to be the most complicated game commonly played today, with more possible patterns of moves than there are atoms in the universe. The defeat of a human expert by a machine learning program has thus been hailed as a significant advance in the field of artificial intelligence.

But the university’s chair in computer engineering from the School of Electrical Engineering, Professor Dong Xu, noted that the development raises questions about how much we should control AI’s ability to self-learn.

“It’s said that a person is able to memorise 1000 games in a year, but a computer can memorise tens of thousands or hundreds of thousands during the same period. And a supercomputer can always improve — if it loses one game, then it would analyse it and do better next time,” he said.

“If a supercomputer could totally imitate the human brain, and have human emotions such as being angry or sad, it will be even more dangerous.”

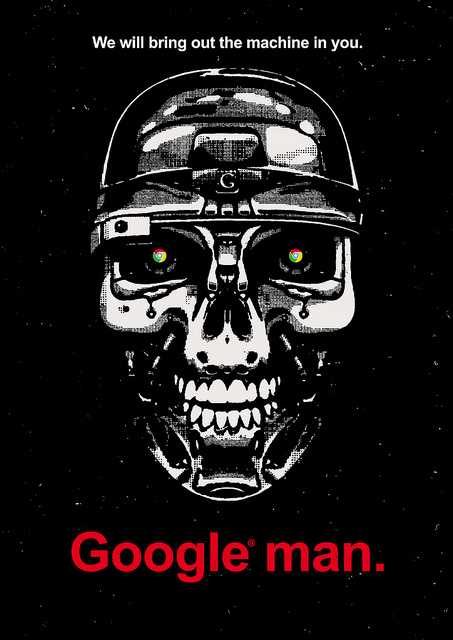

He said Google and Facebook must set up moral and ethics committees to ensure that research into AI doesn’t accidentally create machines that act maliciously.

Dr Michael Harre, a senior lecturer in complex systems who spent years studying the AI behind Go, an ancient Chinese board game, agreed that the development raises concerns.

“The technology has developed to a point that it can now outsmart a human in both simple and complex tasks,” he said.

“This is a concern because artificial intelligence technology may reach a point in a few years where it is feasible that it could be adapted to areas of defence where a human may no longer be needed in the control loop: truly autonomous AI.”
